
Scientists publish a study on pepper disease phenotyping using the Videometer multispectral imaging system


Source: Beijing Bopute Technology Co., Ltd. (北京博普特科技有限公司)


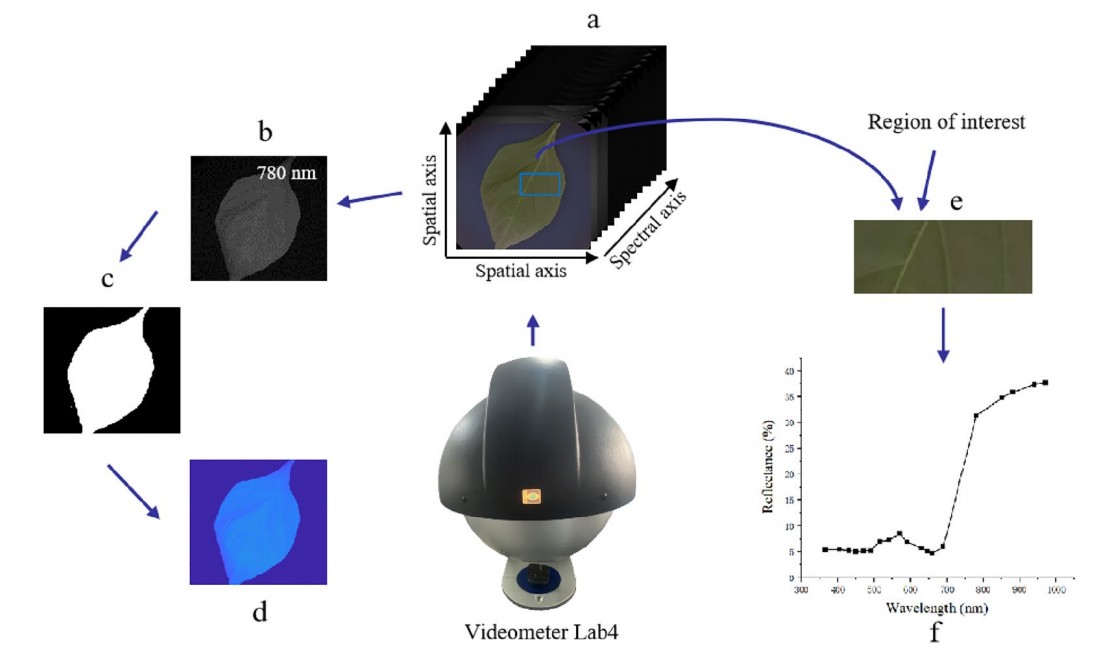

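Recently, scientists from China used the Videometer multispectral imaging system in a study published in the journal Plant Methods under the title "A CNN model for early detection of pepper Phytophthora blight using multispectral imaging, integrating spectral and textural information". The VideometerLab multispectral imaging system can be used for plant pathology phenomics and for acquiring and analyzing optical fingerprints of plant diseases. It can also serve as a general-purpose imaging platform for plant pathology.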

基于多光谱法整合光谱和纹理信息早期检测辣椒疫霉病的CNN模型成像,

摘要

背景

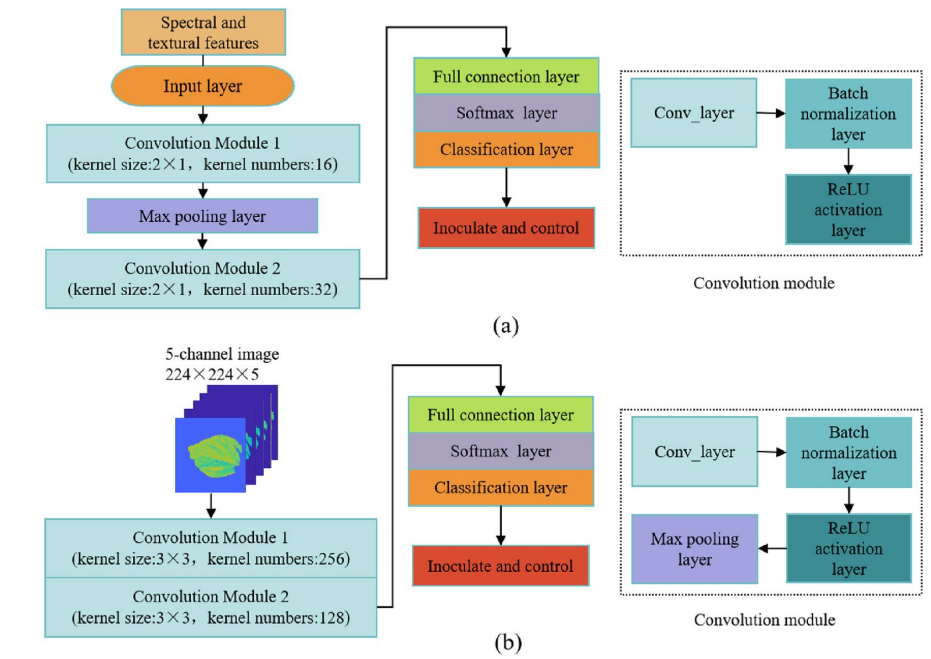

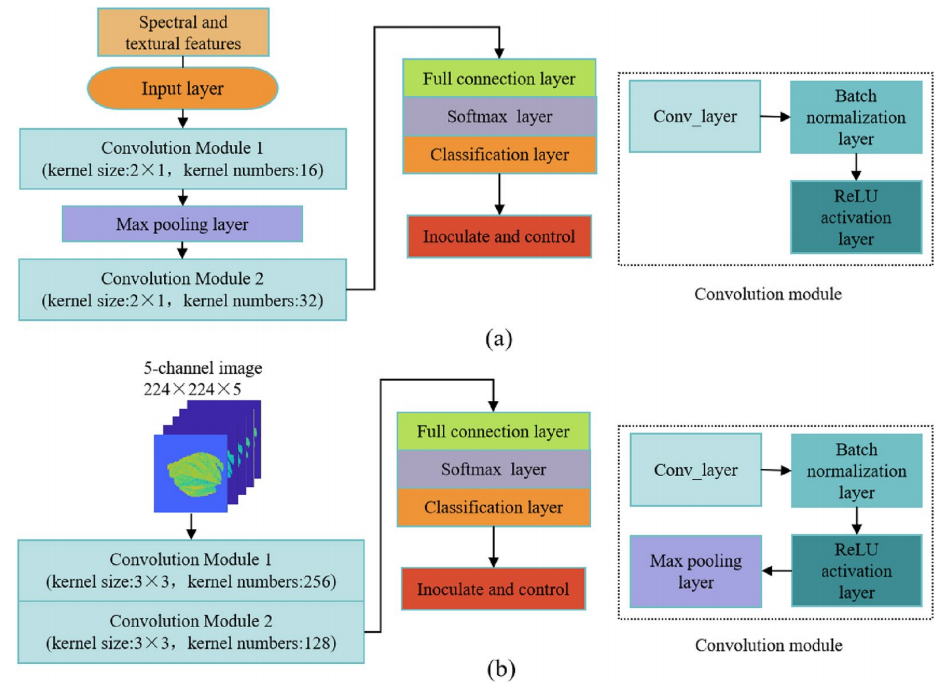

辣椒疫霉病是辣椒生长过程中的一种毁灭性疾病,对辣椒的产量和质量有重大影响。准确、快速、无损地早期检测辣椒疫霉病对辣椒生产管理具有重要意义。本研究探讨了利用多光谱成像结合机器学习检测辣椒疫霉病的可能性。将辣椒分为两组:一组接种紫萎病,另一组作为对照未处理。在接种前 0 小时和接种后 48、60、72 和 84 小时收集多光谱法师样品。利用多光谱成像系统的支撑软件从19个波长中提取光谱特征,利用灰度共生矩阵(GLCM)和局部二元模式(LBP)提取纹理特征。采用主成分分析(PCA)、连续投影算法(SPA)和遗传算法(GA)从提取的光谱和纹理特征中选择特征。基于有效的单谱特征和显著光谱纹理融合特征,建立了两种分类模型:偏最小二乘判别分析(PLS_DA)和一维卷积神经网络(1D-CNN)。基于PCA从光谱数据中提取的五分量主分量(PC)系数,利用主成分合成材料(PCA)进行加权,并与19通道多光谱图像相加,构建二维卷积神经网络(2D-CNN),生成新的PC图像。

结果

结果表明,使用主成分合成算法进行特征选择的模型表现出相对稳定的分类性能。基于单一光谱特征的PLS-DA和1D-CNN的准确率分别为82.6%和83.3%(48 小时标记处)。相比之下,基于光谱纹理融合的PLS-DA和1D-CNN在相同的48h标记下准确率分别达到85.9%和91.3%。基于5张PC图像的2D-CNN准确率为82%。

A CNN model for early detection of pepper Phytophthora blight using multispectral imaging, integrating spectral and textural information

Abstract

Background: Pepper Phytophthora blight is a devastating disease during the growth of peppers, significantly affecting their yield and quality. Accurate, rapid, and non-destructive early detection of pepper Phytophthora blight is of great importance for pepper production management. This study investigated the possibility of using multispectral imaging combined with machine learning to detect Phytophthora blight in peppers. Peppers were divided into two groups: one group was inoculated with Phytophthora blight, and the other was left untreated as a control. Multispectral images were collected before inoculation (0 h) and at 48, 60, 72, and 84 h after inoculation. The supporting software of the multispectral imaging system was used to extract spectral features from 19 wavelengths, and textural features were extracted using a gray-level co-occurrence matrix (GLCM) and local binary patterns (LBP). Principal component analysis (PCA), the successive projection algorithm (SPA), and a genetic algorithm (GA) were used to select features from the extracted spectral and textural features. Two classification models, partial least squares discriminant analysis (PLS-DA) and a one-dimensional convolutional neural network (1D-CNN), were built on the effective single spectral features and on the significant spectral-textural fusion features. For a two-dimensional convolutional neural network (2D-CNN), the coefficients of five principal components (PCs) extracted from the spectral data by PCA were used to weight the 19-channel multispectral images, and the weighted channels were summed to create new PC images.
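The texture step above (GLCM and LBP features computed per band) can be illustrated with a small pure-Python sketch. It is not the authors' pipeline: the single horizontal GLCM offset, the three statistics chosen (contrast, angular second moment, homogeneity), the assumption that each band has already been quantised to a few integer gray levels, and the basic 8-neighbour LBP without uniform-pattern mapping are all illustrative assumptions. The function names `glcm`, `glcm_features` and `lbp_histogram` are hypothetical.

```python
from typing import Dict, List

Image = List[List[int]]  # one band, already quantised to gray levels 0 .. levels-1 (assumption)


def glcm(img: Image, levels: int, dx: int = 1, dy: int = 0) -> List[List[float]]:
    """Normalised, symmetric gray-level co-occurrence matrix for one pixel offset."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = img[r][c], img[r2][c2]
                counts[i][j] += 1  # pair (reference pixel, neighbour)
                counts[j][i] += 1  # and its mirror, so the matrix is symmetric
    total = sum(sum(row) for row in counts)
    return [[v / total for v in row] for row in counts]


def glcm_features(p: List[List[float]]) -> Dict[str, float]:
    """Contrast, angular second moment (ASM) and homogeneity of a normalised GLCM."""
    n = len(p)
    cells = [(i, j) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(p[i][j] * (i - j) ** 2 for i, j in cells),
        "asm": sum(p[i][j] ** 2 for i, j in cells),
        "homogeneity": sum(p[i][j] / (1 + (i - j) ** 2) for i, j in cells),
    }


def lbp_histogram(img: Image) -> List[int]:
    """256-bin histogram of basic 8-neighbour LBP codes; border pixels are skipped."""
    # Neighbours visited clockwise from the top-left; each one sets one bit.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            centre = img[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                if img[r + dr][c + dc] >= centre:
                    code |= 1 << bit
            hist[code] += 1
    return hist


if __name__ == "__main__":
    band = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
    print(glcm_features(glcm(band, levels=4)))
    print(sum(lbp_histogram(band)))  # 4 interior pixels, so 4 LBP codes
```

In a fusion model of the kind described, such per-band texture statistics and LBP histograms would be concatenated with the spectral features before feature selection; how many offsets, levels and LBP variants the study used is not stated in the abstract.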

Results: The results indicated that the models using PCA for feature selection exhibited relatively stable classification performance. At the 48 h mark, the accuracies of PLS-DA and 1D-CNN based on single spectral features were 82.6% and 83.3%, respectively. In contrast, at the same 48 h mark, the accuracies of PLS-DA and 1D-CNN based on spectral-texture fusion reached 85.9% and 91.3%, respectively. The accuracy of the 2D-CNN based on the five PC images was 82%.
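The PC images fed to the 2D-CNN are described in the Background as the 19 band images weighted by PCA coefficients and summed. A minimal pure-Python sketch of that weighted sum follows. It assumes the loading vectors were already obtained from a PCA fitted on pixel spectra (for example with any standard PCA routine), and the function name `pc_images` is hypothetical. Mean-centering is omitted because the abstract describes a plain weighted sum; centering would only shift each PC image by a constant.

```python
from typing import List

Band = List[List[float]]  # one spectral band as a 2-D reflectance image


def pc_images(bands: List[Band], loadings: List[List[float]]) -> List[Band]:
    """Weight every band by one PC's coefficients and sum, giving one image per PC.

    bands:    B images of identical shape (B = 19 in the study).
    loadings: K coefficient vectors of length B (K = 5 in the study), assumed to
              come from a PCA fitted on pixel spectra beforehand.
    """
    n_bands = len(bands)
    rows, cols = len(bands[0]), len(bands[0][0])
    if any(len(w) != n_bands for w in loadings):
        raise ValueError("each loading vector needs one coefficient per band")
    images = []
    for w in loadings:
        # Pixel-wise sum over bands: PC(r, c) = sum_b w[b] * band_b(r, c)
        img = [[sum(w[b] * bands[b][r][c] for b in range(n_bands)) for c in range(cols)]
               for r in range(rows)]
        images.append(img)
    return images


if __name__ == "__main__":
    # Two toy 2x2 bands and one made-up loading vector (0.6, 0.8).
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[0.0, 1.0], [1.0, 0.0]]
    print(pc_images([a, b], [[0.6, 0.8]]))
```

With 19 bands and 5 loading vectors this returns five single-channel images of the original size, which could then be stacked as a 5-channel input to a 2D-CNN; the network architecture itself is not described in the abstract.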